What if you knew how toxic an account is before interacting with it online? Synthesized Social Signals computationally summarize an account’s history to help users interact safely with strangers on social platforms.

Role

Lead UX Engineer: interaction design, UI and backend development (ML Perspective API, Twitter API)

Team

Jane Im, Taylor Denby, Dr. Eshwar C., Dr. Eric Gilbert (Comp.Social Lab, UMich)

Timeline

Sep 2018 — Apr 2019

01. Problem

Around 4 out of 10 people in the US have experienced online harassment of varying degrees, and half of them didn’t know the perpetrator. Around a quarter of US adults have spread misinformation after first being exposed to it through someone they didn’t know.

Horrific online abuse on children’s gaming and social platforms (Source: The New York Times)

What if we could know that an account is toxic before interacting with it online? We could avoid, mute, or block toxic accounts before the situation worsens. It could also help safeguard children from the horrific abuse on online gaming and social media platforms, where unknown predators pose as friendly.

02. The Gap

Lack of existing social signals

It is hard to discern the behavior of people on social platforms. We argue that this is because there are not enough social signals. Online signals such as profile images, bios, and follower counts are features provided by platform designers that allow users to express themselves.

Example social signals on Facebook: a) profile image, b) cover image, c) bio, d) # of followers, e) # of friends and so on (Photo credit: Jane Im)

Ease of Faking

Compared to offline face-to-face interactions, the cues on social platforms are restricted to what the platform provides. More importantly, online social signals are very easy to fake. For instance, on a date I can wear a wig to pretend to be blonde, but on an online dating site I can easily use a completely new face.

An example of how easy it is to fake online profiles

03. Opportunity

Social platforms do have an advantage over their offline counterparts. It takes time and effort to learn a person’s history when you meet them on a date, because they do not bring it along when they walk through the door. Social platforms, in contrast, hold a rich history of an account’s behavior in the form of comments, tweets, hashtags, and so on. We created Synthesized Social Signals: computationally summarized account histories.

Bob’s account history goes through a series of algorithms A1,…,An to signal users of potential toxicity or misinformation
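
The pipeline in the figure can be sketched as a set of independent scoring functions applied to the same account history. This is a minimal illustration, not Sig's actual implementation: the names `run_signals`, `toy_toxicity`, and `toy_misinformation` are hypothetical, and the keyword and domain checks are placeholders standing in for real models.

```python
from typing import Callable, Dict, List

# Each algorithm A_i maps an account's post history to a score in [0, 1].
SignalAlgorithm = Callable[[List[str]], float]


def toy_toxicity(history: List[str]) -> float:
    """Placeholder A_1: share of posts containing a word from a tiny hostile list."""
    hostile = {"idiot", "stupid"}
    if not history:
        return 0.0
    hits = sum(1 for post in history if hostile & set(post.lower().split()))
    return hits / len(history)


def toy_misinformation(history: List[str]) -> float:
    """Placeholder A_2: share of posts linking to a made-up unreliable domain."""
    if not history:
        return 0.0
    hits = sum(1 for post in history if "fakenews.example" in post)
    return hits / len(history)


def run_signals(
    history: List[str], algorithms: Dict[str, SignalAlgorithm]
) -> Dict[str, float]:
    """Run every algorithm over the same history and collect one score per signal."""
    return {name: algorithm(history) for name, algorithm in algorithms.items()}


bob_history = [
    "You are an idiot",
    "Nice weather today",
    "Read this: fakenews.example/story",
    "What a stupid take",
]
signals = run_signals(
    bob_history,
    {"toxicity": toy_toxicity, "misinformation": toy_misinformation},
)
print(signals)
```

Keeping each algorithm behind the same function signature means a new signal can be added without touching the code that renders the result.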

01. Reduces Receiver Cost

Because of the sheer number of historical posts an account can have, it would take a lot of time and effort for a user to go through them and decide whether to follow or interact. Synthesized signals reduce this cost for the user (the receiver) by computing a summary and displaying it on the profile.

02. Difficult/High Cost to Fake

Synthesized signals are more difficult to fake. Because they are computed from hundreds of posts, a bad actor would have to put in a lot of effort to delete a large part of their past tweets, comments, and other history.
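
To make that cost concrete, suppose (purely as an assumption, since the exact flagging rule is not described here) that an account is flagged when the share of flagged posts in its history reaches a cutoff. The hypothetical helper below counts how many flagged posts a bad actor would have to delete to drop under that cutoff.

```python
from fractions import Fraction


def deletions_needed(flagged: int, total: int, cutoff: Fraction) -> int:
    """Smallest number of flagged posts to delete so that the remaining
    share of flagged posts is strictly below `cutoff`.

    Simplifying assumption: deleted posts are not replaced by older ones.
    """
    if not 0 <= flagged <= total:
        raise ValueError("flagged must be between 0 and total")
    if cutoff <= 0:
        raise ValueError("cutoff must be positive")
    deleted = 0
    # Exact fractions avoid floating-point surprises at the boundary.
    while total - deleted > 0 and Fraction(flagged - deleted, total - deleted) >= cutoff:
        deleted += 1
    return deleted


# Illustrative numbers only: 60 flagged posts out of 200, 20% cutoff.
print(deletions_needed(60, 200, Fraction(1, 5)))
```

Under these made-up numbers the account would have to delete 26 of its flagged posts, whereas swapping a profile photo or bio takes a single edit.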

04. Iterations & Research

To demonstrate the concept of Synthesized Social Signals (S3s), we built and published a Chrome browser extension called Sig. Sig computes S3s on social platforms and renders them on profiles. We chose Twitter to demonstrate Sig, and we chose toxicity and misinformation, two pressing problems on social platforms, for our field study.

How can we help users safely interact with strangers and be transparent?

Research

To understand what signals Twitter users rely on when deciding whether to interact with strangers, we sent out an online survey and received 60 responses. Respondents mostly relied on past tweets and replies to gauge whether they wanted to interact with strangers.

Results from 60 Twitter users indicating the importance of signals used when interacting with stranger accounts

Design to warn users of bad actors

When a user visits a profile, Sig pulls the account’s last 200 tweets and replies and computationally summarizes whether the account is safe to interact with. We augmented the bio section to include the synthesized signals. We also wanted a way to visually draw the user’s attention to a bad actor. An initial idea was to overlay a vector image, but we dismissed it and decided instead to use an encircling profile border to indicate problematic behavior in real time.
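
The summarization step might look like the sketch below. Only the 200-post window comes from the text above; the aggregation rule (share of recent posts scoring above a per-post cutoff), the cutoff value, and the names `summarize_account` and `toy_scorer` are assumptions for illustration. In Sig the per-post score would come from a real model rather than the placeholder used here.

```python
from typing import Callable, Sequence

WINDOW_SIZE = 200  # Sig looks at the last 200 tweets and replies


def summarize_account(
    posts_newest_first: Sequence[str],
    score_post: Callable[[str], float],
    post_cutoff: float = 0.8,  # assumed value, not taken from Sig
) -> float:
    """Return the share of the most recent posts whose score reaches post_cutoff."""
    window = posts_newest_first[:WINDOW_SIZE]
    if not window:
        return 0.0
    flagged = sum(1 for post in window if score_post(post) >= post_cutoff)
    return flagged / len(window)


def toy_scorer(post: str) -> float:
    """Placeholder scorer standing in for a real toxicity model."""
    return 0.9 if "hate" in post.lower() else 0.1


# 300 posts in total; only the newest 200 are considered.
timeline = ["I hate you"] * 30 + ["good morning"] * 270
share = summarize_account(timeline, toy_scorer)
print(f"{share:.2f}")
```

Passing the scorer in as a function keeps the windowing logic independent of whichever model produces the per-post scores.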

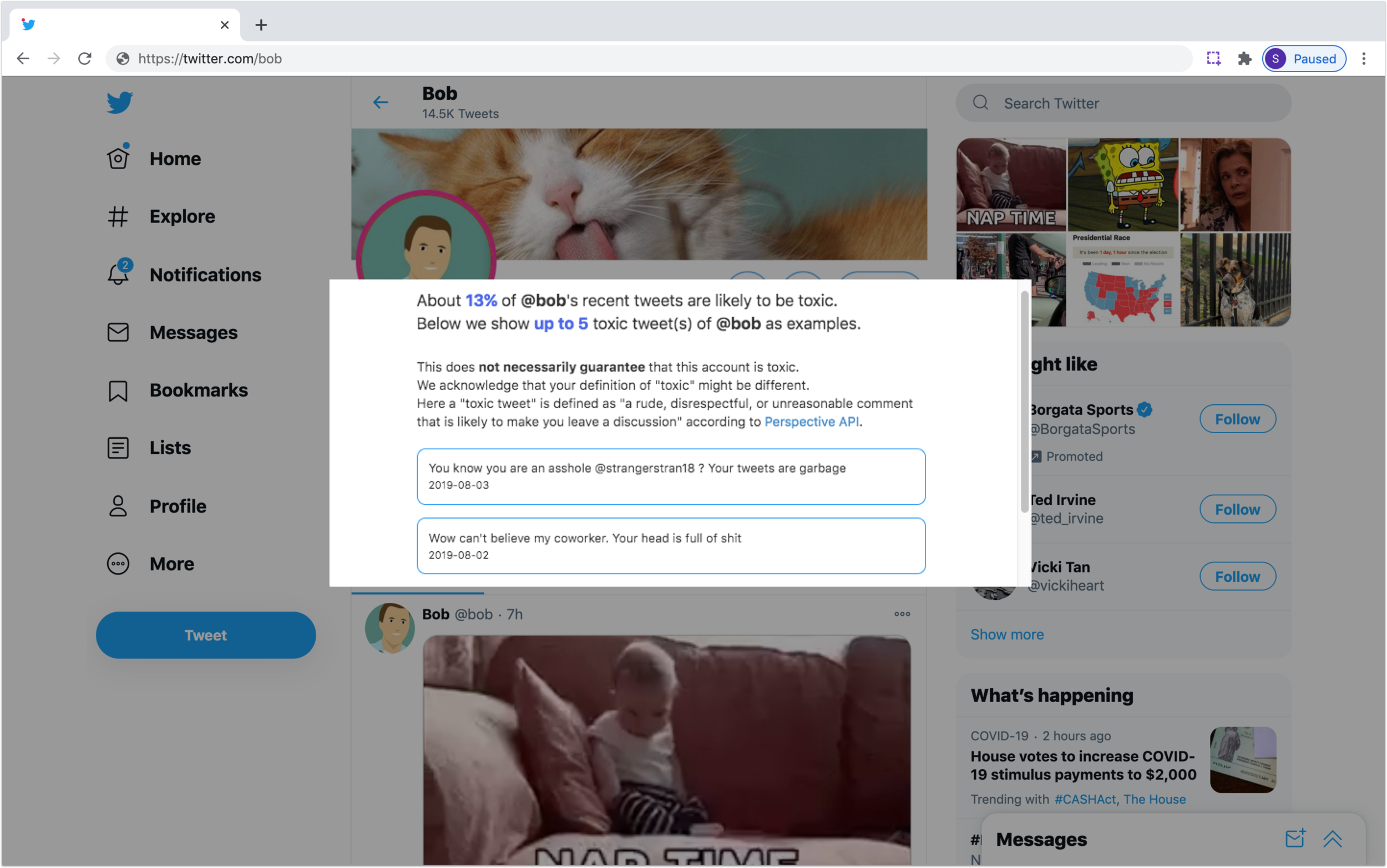

Design for transparency

We wanted to be transparent with users at every stage, and we learned that users wanted to know which tweets were flagged as toxic or misinformation-spreading. An early prototype displayed the abusive words themselves, but users were not comfortable seeing those words upfront. As we strengthened our backend from keyword matching to machine learning, we explored ideas such as a flagged-tweets section and highlighting flagged tweets in the profile to back each claim with evidence.

How do users discover and interact with strangers?

Notification

Timeline

Design

We identified two main entry points where users discover and interact with new accounts: the timeline and notifications (liking, following, mentioning). We designed Sig to encircle profile borders to indicate problematic behavior in real time. First, a blue border indicates that Sig is computing S3s for that account. After computation, the border either turns pink, indicating that the account is toxic or spreading misinformation, or disappears, indicating that the account is safe to interact with.
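
The border behavior amounts to a small three-state mapping. The sketch below is one way to express it; the `BorderState` enum and `border_for` function are hypothetical names, and the extension itself would apply the result as styling on the page rather than return a value.

```python
from enum import Enum
from typing import Optional


class BorderState(Enum):
    COMPUTING = "blue"  # S3s are still being computed
    FLAGGED = "pink"    # toxic or spreading misinformation
    SAFE = "none"       # border disappears


def border_for(is_flagged: Optional[bool]) -> BorderState:
    """Map a computation result to a border state.

    `None` means the result is not available yet.
    """
    if is_flagged is None:
        return BorderState.COMPUTING
    return BorderState.FLAGGED if is_flagged else BorderState.SAFE


print(border_for(None).value, border_for(True).value, border_for(False).value)
```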

Constraints

Because the average latency for computing S3s for accounts while scrolling through the timeline was about 10 seconds, we decided not to compute S3s for accounts a user already follows. We assumed that accounts a user already follows are not strangers.
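
A minimal sketch of that filter is shown below. The function name `accounts_to_score` and the handles are made up for illustration, and skipping handles that repeat within the same view is an extra assumption beyond what is described above.

```python
from typing import Iterable, List, Set


def accounts_to_score(visible_accounts: Iterable[str], following: Set[str]) -> List[str]:
    """Return the accounts that still need S3s, in order of first appearance."""
    pending: List[str] = []
    seen: Set[str] = set()
    for handle in visible_accounts:
        # Followed accounts are assumed not to be strangers, so skip them.
        # Repeated handles are skipped too (an added assumption).
        if handle in following or handle in seen:
            continue
        seen.add(handle)
        pending.append(handle)
    return pending


timeline_authors = ["@alice", "@stranger1", "@bob", "@stranger1", "@stranger2"]
following = {"@alice", "@bob"}
to_score = accounts_to_score(timeline_authors, following)
print(to_score)
```

In this example only two of the five timeline entries trigger a computation.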

How can we give users more agency?

Design

Through quick iterations we learned that different people have different tolerances for toxicity and misinformation, and that machine learning has inherent limitations and biases. We therefore decided to give users the agency to set their own thresholds. Users can change these thresholds at any time by clicking on the extension icon and moving the sliders.
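
The effect of the sliders can be sketched as follows. The default values, the 0 to 1 score range, and the names `set_threshold` and `triggered_signals` are assumptions for illustration rather than Sig's actual settings.

```python
from typing import Dict, List

# Hypothetical defaults; the real starting values are not stated here.
DEFAULT_THRESHOLDS: Dict[str, float] = {"toxicity": 0.5, "misinformation": 0.5}


def set_threshold(thresholds: Dict[str, float], signal: str, slider_value: float) -> Dict[str, float]:
    """Return a copy of the thresholds with one slider moved, clamped to [0, 1]."""
    if signal not in thresholds:
        raise KeyError(f"unknown signal: {signal}")
    updated = dict(thresholds)
    updated[signal] = min(1.0, max(0.0, slider_value))
    return updated


def triggered_signals(scores: Dict[str, float], thresholds: Dict[str, float]) -> List[str]:
    """Names of the signals whose score reaches the user's threshold."""
    return sorted(
        name
        for name, score in scores.items()
        if name in thresholds and score >= thresholds[name]
    )


account_scores = {"toxicity": 0.3, "misinformation": 0.1}
cautious = set_threshold(DEFAULT_THRESHOLDS, "toxicity", 0.2)
print(triggered_signals(account_scores, DEFAULT_THRESHOLDS))
print(triggered_signals(account_scores, cautious))
```

The same account is left alone under the default settings but flagged for a user who lowers the toxicity threshold, which is the kind of per-user tolerance the sliders are meant to support.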

05. Final

User Scenario

Michelle installs Sig on her Chrome browser to help her interact safely with strangers on Twitter. She sets her toxicity and misinformation thresholds.

One of Michelle’s followers retweeted a tweet containing a meme. She thought the tweet was funny, but noticed Sig flagged the account that tweeted it.

She goes to check the account profile and finds it is marked as toxic.

By clicking on the “toxicity” tag, she discovers that many tweets were aggressive toward others and included offensive racial slurs. “Glad Sig prevented me from following the account,” she thought as she quickly closed the profile page.

Algorithm

06. Field Study & Impact

We recruited 11 people who frequently use Twitter and asked them to use Sig while browsing Twitter for at least 30 minutes per day, over at least four days. We then conducted semi-structured interviews and an online survey.

01. Feeling safer

One of our biggest achievements was that participants said they felt safer with Sig. These participants were all women and non-binary people, who face online abuse more often than other groups.

“I would keep setting it low for general safety, and then if something seemed like it was flagged incorrectly, then I could just note that to myself. I’d rather risk that than it not flagging people it should.”

02. Conventional signals vs. S3s

Probably the most interesting finding was that Sig flagged accounts with a high number of followers or verified status (conventional indications of credibility) as toxic or misinformation-spreading.

“It was like I figured, cause she had a blue check mark and everything, I figured, and she’s a politician so it’s kind of funny when you see that [flagged by Sig as misinformation spreading].”

03. Aid in decision making — Block, Mute, Follow

Many participants used Sig to make decisions while interacting with stranger accounts. They enjoyed making this decision using Sig.

“Double check to see if the flagged tweets matched up with what I thought was a problem, which it usually did […] sometimes I’d mute or block them preemptively.”

04. Reducing receiver costs

Six participants said that Sig reduced the time and effort of identifying toxic accounts, and all found the evidence-based claims useful in understanding why an account was flagged as toxic.

“…the extension would be a useful way for me to quickly get information without having to scroll back a zillion pages…”